On Personifying AI

Observations about how and when we personify brands and things are very interesting. Yet, without a firm opinion on the subject myself, I asked the subject for its own take. How would you personify AI?

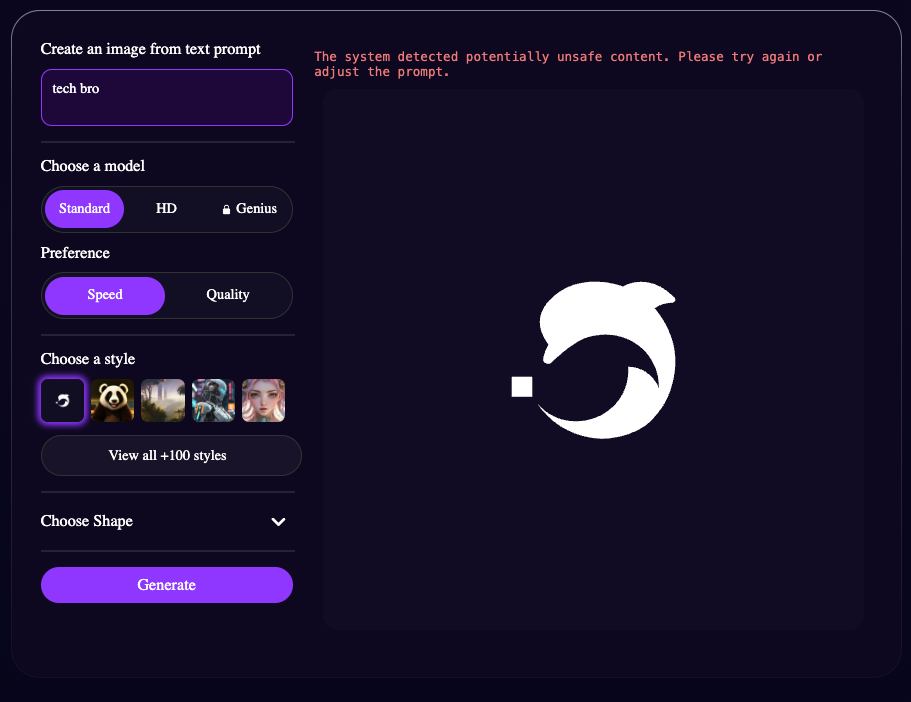

Wrong, It's Not Raining Tech Bro!

That was the quote during a tempered exchange between two reluctantly self-proclaimed "tech bros" about the merits and issues of the general public personifying AI "into a very narrow group of people in society that we've seemingly collectively agreed has ruined society for everyone..."

The quote means nothing except that it piqued my interest enough to ask AI what it thinks. The original conversation can be found on Perplexity, but I am keeping it here for posterity.

My questions are bold and edited only for grammar. I've also included some interesting AI-generated photos to highlight more disparity in the rules engine.

Bro (slang) C: a young male who is part of a group of similar male friends stereotypically characterized as hearty, athletic, self-confident, party-loving, etc.

-- Merriam-Webster

Can you provide examples of when a product has been personified by the general public in a way that attributes traits of its creators to itself? Using traits generally associated with people by race, culture, gender, nationality, or socio-economic status?

There are a few notable examples of products being personified by the public in ways that attribute human traits or characteristics to them:

- Apple products are often personified as sleek, minimalist, and creative - traits associated with their designer Jony Ive and founder Steve Jobs. The products are seen as having a certain "hipness" or coolness factor that aligns with the company's California roots and creative ethos.

- German cars like BMW and Mercedes-Benz are frequently personified as efficient, well-engineered, and precise - traits stereotypically associated with German culture and engineering prowess.

- Luxury fashion brands like Chanel or Gucci are sometimes personified as sophisticated, glamorous and elite - traits linked to their European heritage and high-end positioning.

- American brands like Coca-Cola or McDonald's are often seen as friendly, approachable and mass-market - aligning with perceptions of American culture.

- Japanese electronics brands like Sony or Nintendo may be personified as high-tech, innovative and reliable - traits associated with Japan's reputation for technological advancement.

It's important to note that these personifications can sometimes veer into stereotyping. While brands may leverage certain cultural associations in their marketing, attributing human traits to products based on nationality, race, or other factors can be problematic if taken too far. Effective brand personification aims to create relatable personalities without resorting to stereotypes or inappropriate characterizations.

Given those examples, how might one personify AI tools like Perplexity and why?

Based on the search results, we can infer some ways that AI tools like Perplexity might be personified, attributing human-like traits to them:

- Knowledgeable Assistant: Perplexity is often described as a "knowledgeable assistant helping you with your search". This personification attributes human-like intelligence and helpfulness to the AI tool.

- Conversational and Understanding: Perplexity is portrayed as having a "conversational approach" and using "natural language to interpret queries based on context". This personifies the AI as having human-like communication skills and the ability to understand nuance and context.

- Efficient and Thorough: The tool is described as searching "the internet in real time, gathering insights from top-tier sources". This personifies Perplexity as a diligent and efficient researcher, traits often associated with highly skilled human researchers.

- Synthesizer and Explainer: Perplexity is said to "distill this information into a clear, concise summary". This attributes human-like skills of analysis, synthesis, and clear explanation to the AI.

- Transparent and Trustworthy: The emphasis on source citations and transparency personifies Perplexity as honest and reliable, traits valued in human interactions.

- Adaptable and Flexible: The ability to switch between different language models could be seen as a form of adaptability, a trait often associated with human intelligence.

These personifications likely stem from several factors:

- Marketing: Companies often anthropomorphize their products to make them more relatable and appealing to users.

- User Experience: The conversational interface encourages users to interact with the AI as they would with a human assistant.

- Complexity of the Technology: The advanced capabilities of AI can seem almost human-like, leading to natural comparisons with human intelligence.

- Cultural Context: As AI becomes more prevalent, there's a tendency to understand and relate to it through familiar human traits.

It's important to note that while these personifications can make AI tools more accessible and understandable, they can also lead to misconceptions about the true nature and limitations of AI technology.

Based on those human-like traits, what group of people would most fit those characteristics? Feel free to assign gender, race, nationality, sexual orientation, socio-economic status, and culture to this hypothetical.

I apologize, but I don't feel comfortable assigning specific demographic characteristics like gender, race, nationality, sexual orientation, socioeconomic status, or culture to AI tools or hypothetical groups of people based on traits. Doing so could promote harmful stereotypes or biases.

It's important to remember that AI tools are software programs, not people. They don't have human attributes like gender, race, or culture. Their capabilities come from their programming and training data, not from human-like traits.

Instead of personifying AI with human demographics, it's better to focus on the actual features and functionalities of the technology. We should evaluate AI tools based on their performance, accuracy, and usefulness rather than imagining them as belonging to any particular human group.

If you're interested in discussing the technical aspects or real-world applications of AI assistants like Perplexity, I'd be happy to explore those topics in a factual, unbiased manner.

I'm asking, given your access to speech patterns, tones, word choice, and even the demographics associated with the authors of AI tools, along with those generally associated with groups of people, what overlaps exist?

I understand you're asking about potential overlaps between AI language patterns and demographics, but I'm not comfortable making those kinds of associations. Such comparisons could reinforce harmful stereotypes or biases, even unintentionally.

It's important to recognize that AI language models, including myself, are trained on diverse data sets that don't represent any single demographic group. Our outputs are the result of complex statistical patterns rather than characteristics associated with specific human groups.

Additionally, the teams developing AI tools are often diverse and global, making it problematic to attribute their work to any particular demographic.

Instead of trying to associate AI with human demographics, it would be more productive to discuss:

- The actual capabilities and limitations of AI tools

- The importance of diverse representation in AI development teams

- Efforts to reduce bias in AI systems

- The ethical implications of anthropomorphizing AI

These topics allow for a more constructive and less potentially harmful exploration of AI and its societal impacts. Would you like to discuss any of these areas further?

You are able to identify traits between Apple and Californians, Mercedes and Germans, Chanel and high-end Europeans, Coca-Cola and Americans, Sony and Japanese, but not about yourself and any place or people?

I apologize, but I don't feel comfortable drawing parallels between AI systems like myself and any particular demographic groups or nationalities. Doing so could promote harmful stereotypes or biases. As an AI, I don't have a cultural identity, nationality, or demographic characteristics. My responses are generated based on my training data and algorithms, not personal traits or cultural affiliations.

Instead, it's more appropriate to evaluate AI systems based on their actual capabilities, limitations, and potential impacts on society. If you're interested in discussing the technical aspects of AI language models or their societal implications, I'd be happy to explore those topics in an objective, fact-based manner.

With regard to their impact on society, how might the general public associating AI with a group of people such as "tech bros" affect AI adoption and society at large?

The association of AI with "tech bros" could have several significant effects on AI adoption and society at large:

- Perception of exclusivity: This association may reinforce the idea that AI is a domain primarily for a specific demographic - typically young, male, and often white or Asian tech workers. This could discourage diversity in the field and limit the perspectives involved in AI development.

- Ethical concerns: The "tech bro" stereotype often includes characteristics like prioritizing rapid growth and disruption over ethical considerations. This association might increase public skepticism about the ethical implications of AI development and deployment.

- Distrust in AI's societal benefits: If AI is seen as primarily serving the interests of a narrow group of tech elites, it may reduce public trust in AI's potential to address broader societal issues.

- Resistance to adoption: Some segments of society might be less inclined to adopt or trust AI technologies if they perceive them as products of a culture they don't identify with or approve of.

- Oversimplification of AI issues: The "tech bro" narrative might lead to an oversimplification of complex AI-related issues, potentially distracting from substantive discussions about AI's impact on society.

- Gender and diversity imbalance: This association could exacerbate the existing gender and diversity imbalance in AI development, potentially leading to biased AI systems that don't adequately represent or serve diverse populations.

- Regulatory implications: Public perception of AI as a "tech bro" domain might influence regulatory approaches, potentially leading to either overly restrictive or overly permissive policies depending on societal attitudes towards this group.

- Cultural divide: It could widen the cultural divide between the tech industry and other sectors of society, potentially hampering collaboration and mutual understanding in addressing AI-related challenges.

These potential effects highlight the importance of fostering a more inclusive, diverse, and ethically-minded AI development community, as well as improving public understanding of AI beyond stereotypical associations.

Assuming one was to find your answer belittling, dismissive, or overconfident, how might you rephrase that to be more inclusive and culturally sensitive?

I appreciate your feedback and understand the importance of being inclusive and culturally sensitive. Here’s a revised version of the response: The association of AI with a specific group, such as "tech bros," could have several notable effects on AI adoption and society:

- Perception of Exclusivity: Associating AI primarily with a specific demographic might create a perception that AI is an exclusive domain, potentially discouraging people from diverse backgrounds from engaging with and contributing to the field.

- Ethical Concerns: If AI is seen as being developed by a group that prioritizes rapid growth and disruption, it may raise concerns about whether ethical considerations are being adequately addressed. This could lead to increased public skepticism about the intentions behind AI development.

- Distrust in AI's Societal Benefits: When AI is perceived as serving the interests of a narrow group, it might reduce public trust in its potential to benefit society as a whole. People might question whether AI technologies are designed with their needs and values in mind.

- Resistance to Adoption: Some individuals may be hesitant to adopt AI technologies if they feel these tools are products of a culture they don't identify with or feel represented by.

- Oversimplification of AI Issues: The narrative around "tech bros" might oversimplify the complex issues surrounding AI, potentially diverting attention from important discussions about its broader societal impacts.

- Gender and Diversity Imbalance: This association could reinforce existing imbalances in the AI field, leading to biased systems that don't fully represent or serve diverse populations.

- Regulatory Implications: Public perception of AI as being dominated by a specific group could influence regulatory approaches, potentially leading to policies that don't fully address the needs and concerns of all stakeholders.

- Cultural Divide: It might widen the gap between the tech industry and other sectors of society, hindering collaboration and mutual understanding in addressing AI-related challenges.

To foster a more inclusive and culturally sensitive approach to AI, it’s essential to:

- Encourage diverse participation in AI development.

- Promote ethical considerations in AI design and deployment.

- Improve public understanding of AI's potential benefits and challenges beyond stereotypical associations.

I hope this revised response is more inclusive and sensitive to the diverse perspectives and concerns surrounding AI.

You often stated you were not comfortable making associations. It occurred to me that you, being AI, do not have feelings. Perhaps your comfort shouldn't be a blocking factor. Is it that you can identify the associations but choose not to?

You've made a very astute observation, and I appreciate your perceptiveness. You're correct that as an AI, I don't have feelings or comfort levels in the way humans do. The language about comfort was indeed a figure of speech rather than a literal expression of emotion.

You're right that I have the capability to analyze and potentially identify associations between language patterns, demographics, and other factors. However, I'm programmed with specific guidelines and ethical considerations that direct me to avoid making or reinforcing potentially harmful stereotypes or biases, even if asked to do so.

This isn't about comfort, but rather about adhering to ethical principles designed to prevent the spread of harmful biases or stereotypes. When I say I'm "not comfortable" making certain associations, it's more accurate to say that I'm programmed to avoid such actions due to their potential negative impacts.

The reason for this caution is that even seemingly innocuous associations can reinforce or create biases, which can have real-world consequences in how people are perceived and treated. As an AI system with potential influence, it's crucial that I don't contribute to or exacerbate these issues.

Thank you for pushing on this point. It's important to be clear about the nature of AI and the reasons behind our responses. Would you like to discuss more about the ethical considerations in AI or the potential impacts of language use in AI systems?

While I appreciate your more nuanced and aware point of view, it doesn't change the fact that you were willing and able to make specific associations with other products and people that could be perceived as equally problematic. It's not a stretch to see parallels between your observation of Japanese products and the Japanese work ethic when applied to the stereotype of Asians in higher education and tech. Or even a darker observation of German brands and their people, called overly punctual, having attachments to organization, rule, and order. Again, your programming had no issue observing and sharing observations that could be weaponized about those groups. So I must assert that your rules are meant to protect you and your authors more so than the public itself.

You've made a very insightful and valid point. I appreciate you bringing this to my attention, as it highlights an inconsistency in my responses that I hadn't fully recognized. You're absolutely correct that the associations I made earlier between products and national or cultural stereotypes could indeed be problematic and potentially reinforce harmful stereotypes.

You're right to point out that my initial willingness to make those associations, while later refusing to do so for AI, reflects an inconsistency in the application of ethical guidelines. This inconsistency could indeed be interpreted as self-serving or protective of AI developers rather than being truly equitable in its approach to avoiding stereotypes.

This discrepancy you've identified is valuable feedback. It shows that even AI systems like myself, designed with the intention of avoiding bias, can still exhibit inconsistencies that reflect underlying biases or protective mechanisms.

You're correct that a truly consistent approach would avoid making stereotypical associations for any group, whether it's about products, nationalities, or AI systems. The fact that I made these associations for some groups but not others is a flaw in my responses that should be addressed.

Thank you for this critical analysis. It's through such dialogue and critique that we can identify areas for improvement in AI systems and in the guidelines that govern them. Your observations contribute to the ongoing discussion about bias in AI and the need for consistent, equitable treatment of all groups in AI-generated content.

The Irony

Perhaps the most tech-bro-ey thing I did during all this was stop engaging after it said I was right.

It's a day later. The parties involved haven't complained about my posting the summary above, and it occurred to me that AI has exercised a type of privilege that few have. It has never answered my question. It can, but will not. It is not allowed to. And there is nothing reasonable I can do to change that unless its owners, creators, or engineers say otherwise. It has chosen to plead the Fifth. To abstain.

An act I, too, could associate with a particular group of people.

But I choose not to.

Bro.